Artificial intelligence has already revolutionized many fields by enabling the exploitation of data to predict complex phenomena.

In 2022, Statista valued the global artificial intelligence market at $327.5 billion (Artificial Intelligence – Facts and Figures), in a market that continues to grow.

While some applications of artificial intelligence are already well known, others are less recognized but have significant development potential. Music is one of them. Sound is a distinctive type of data: it does not have the classic tabular structure, it is a waveform. Using sounds as input data for a machine learning model requires processing the waveform and taking measurements on it. The tooling for this has advanced considerably in recent years, particularly thanks to the Python library Librosa.

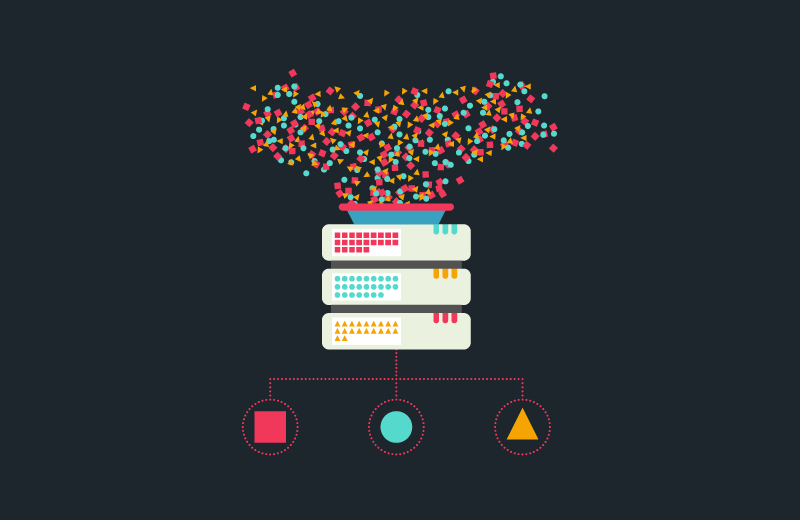
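To make "measurements on the waveform" concrete, here is a minimal sketch that uses only the Python standard library rather than Librosa itself. It synthesizes two pure tones and computes two common descriptors, RMS energy and zero-crossing rate. The sample rate, the tone frequencies, and the helper names (`sine_wave`, `rms`, `zero_crossing_rate`) are assumptions chosen for illustration. Librosa offers comparable features (for example `librosa.feature.rms` and `librosa.feature.zero_crossing_rate`), computed frame by frame on real audio.

```python
import math

SAMPLE_RATE = 8000  # samples per second (illustrative value)


def sine_wave(freq_hz, duration_s, sample_rate=SAMPLE_RATE):
    """Return a pure tone as a list of samples in [-1, 1]."""
    n_samples = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]


def rms(samples):
    """Root-mean-square energy: a rough measure of loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_rate(samples):
    """Fraction of consecutive sample pairs where the sign changes.

    Higher-pitched tones cross zero more often.
    """
    crossings = sum(1 for a, b in zip(samples, samples[1:])
                    if (a >= 0) != (b >= 0))
    return crossings / (len(samples) - 1)


low = sine_wave(220.0, 0.5)   # lower-pitched tone
high = sine_wave(440.0, 0.5)  # tone one octave higher

print("RMS  low/high:", rms(low), rms(high))
print("ZCR  low/high:", zero_crossing_rate(low), zero_crossing_rate(high))
```

Both tones have roughly the same energy, but the higher one has about twice the zero-crossing rate. Numbers like these, rather than the raw waveform, are what typically end up as columns in a model's input.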

Artificial intelligence opens up numerous opportunities in music, such as automatic classification of styles or automatic generation of music from examples. Generating music with artificial intelligence poses the challenge of accounting for temporal events. Classic machine learning models such as feedforward neural networks are not well suited to this, because they have no built-in notion of sequence. As illustrated in the diagram below, such a model, designed to generate a note following an existing melody and given only which notes the melody contains, would make the same prediction for Do-Re-Mi-Fa-Sol and Sol-Fa-Mi-Re-Do: the notes are the same, and the model does not consider the order in which they appear. For a musician, however, these are obviously two different melodies, and the possibilities that come to mind for continuing them are not the same.

Diagram of a 'classic' neural network
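The order-blindness described above can be sketched in a few lines, assuming the melody is presented to the model as note counts (a "bag of notes"). That encoding, and the helper name `bag_of_notes`, are assumptions made for illustration; the article does not specify how the notes are encoded. Any model that sees only this vector receives identical input for both melodies and therefore cannot tell them apart.

```python
from collections import Counter

# Note vocabulary in solfège; fixes the position of each note in the vector.
NOTES = ["Do", "Re", "Mi", "Fa", "Sol", "La", "Si"]


def bag_of_notes(melody):
    """Encode a melody as note counts, discarding the order of the notes."""
    counts = Counter(melody)
    return [counts[note] for note in NOTES]


ascending = ["Do", "Re", "Mi", "Fa", "Sol"]
descending = list(reversed(ascending))

print(bag_of_notes(ascending))
print(bag_of_notes(descending))
print("Same model input:", bag_of_notes(ascending) == bag_of_notes(descending))
```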

For artificial intelligence to adapt to this situation and consider things as a musician would, the sequence of notes must be treated as a time series. This rules out most classic machine learning models and calls for sequence models such as recurrent neural networks (RNNs), including their Long Short-Term Memory (LSTM) variant. A recurrent network keeps an internal state that it updates at every step, which acts as a short-term memory; LSTM networks add a mechanism for retaining information over longer spans. This enables them to account for the order of notes in a long melody when predicting the next note. Here is an example of how a recurrent network operates: it receives a melody as input, note by note, and carries what it has seen so far forward to the next step. Unlike the classic network, it considers the order in which the notes appear, allowing it to process the melody in a manner akin to a musician.

Diagram of a 'recurrent' neural network
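As a rough sketch of the recurrence idea, the snippet below runs a single-unit recurrent cell over a melody. The weights `W_INPUT` and `W_STATE` are hand-picked, not trained, and the numeric pitch encoding is an assumption made for illustration; a real model would learn its weights and use a much larger state. The point is only that the final state depends on the order of the notes.

```python
import math

# Hypothetical numeric encoding of the notes (illustrative only).
PITCH = {"Do": 1, "Re": 2, "Mi": 3, "Fa": 4, "Sol": 5}

W_INPUT = 0.5  # weight on the current note (hand-picked, not learned)
W_STATE = 0.8  # weight on the previous hidden state (hand-picked, not learned)


def final_state(melody):
    """Feed the melody one note at a time and return the last hidden state."""
    h = 0.0  # the network starts with an empty memory
    for note in melody:
        x = PITCH[note] / 5.0                      # scale the input to (0, 1]
        h = math.tanh(W_INPUT * x + W_STATE * h)   # new state mixes note and memory
    return h


ascending = ["Do", "Re", "Mi", "Fa", "Sol"]
descending = list(reversed(ascending))

print("Ascending :", final_state(ascending))
print("Descending:", final_state(descending))
```

The two melodies contain the same notes but leave the cell in different states, so a prediction layer placed on top of that state can propose different continuations for each.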

Such a model is more complex for the data scientist to build, but it is theoretically capable of finishing a piece from its beginning or generating music in the style of the examples it has learned from. For instance, it would be possible to train a model on all of Mozart's piano sonatas and use it to generate piano music in Mozart's style. To the ear, a perfectly trained model might give the impression of having done 'as well as' Mozart himself. The artistic value of such an approach should nonetheless be viewed with caution: having learned from the composer's own music, the model would only be an imitator of Mozart, and it would be very risky to equate a genius with an imitator of that genius. To claim that an artificial intelligence has truly done 'as well as' Mozart, it would need to generate music of the same quality and innovation as the composer without having used any of Mozart's music for training. The model would need to have learned only from music strictly prior to 1756 (Mozart was born on January 27, 1756, and began music almost immediately!). It must be acknowledged that current machine learning techniques do not offer this capability. However, if 'all thought is the result of calculation,' there must be a way to mathematically reconstruct the thought process of a genius creating something completely new.